Natural Earth provides some of the best data for small-scale (world, continental and national) mapping. It is clean, accurate, extensive, available at a number of different scales and, best of all, free.

To load the data into PostGIS (PostgreSQL) we will use the vector tools provided in GDAL, mainly ogr2ogr.

After downloading the data (I went for all of the vector data in ShapeFile format), I first need to generate a list of datasets and their respective file paths. This list goes into a spreadsheet, where the command to load the data is applied to each line, and finally everything is run as a shell script. Setting up a PostGIS database is covered in my previous post.

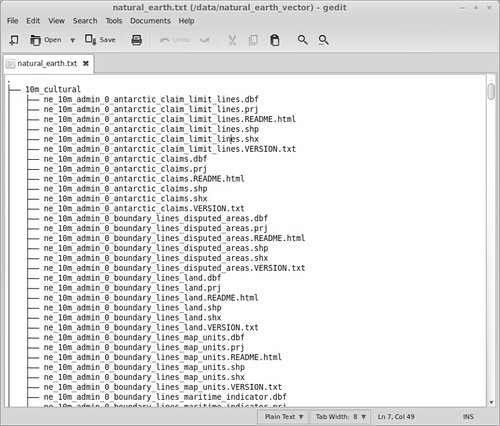

My Natural Earth data consisted of:

28 directories, 1472 files

So a little automation is needed. Interestingly, there were also a few .gdb files, such as “ne_10m_admin_1_label_points.gdb”. Those we can look into at a later date.

To begin:

ls > my_contents.txt

Produced a decent result, but not quite what I was looking for.

sudo apt-get install tree

tree > natural_earth.txt

This was much better, although I’m sure ls would have done just as well with a bit more tuning.
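For what it is worth, find can produce a cleaner list than either ls or tree. This is a minimal sketch rather than what I actually ran; the function name list_shapefiles is made up for illustration, and it assumes you are in the directory that holds the unzipped Natural Earth folders.

```shell
# List every ShapeFile below the current directory, one relative path per line.
# Assumption: run from the directory holding the unzipped Natural Earth folders.
list_shapefiles() {
    # -type f skips directories; sed strips the leading "./" so the paths
    # look like 10m_cultural/ne_10m_..., ready to paste after a command.
    find . -type f -name '*.shp' | sed 's|^\./||' | sort
}
```

Running list_shapefiles > shapefiles.txt (the output file name is only an example) gives a plain list of .shp paths without the .dbf, .prj and .shx companions.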

After a bit of work in the spreadsheet, I had what I wanted. Perhaps not the most elegant solution, but certainly enough for my purposes.

Now for the ogr2ogr command:

ogr2ogr -nlt PROMOTE_TO_MULTI -progress -skipfailures -overwrite -lco PRECISION=no -f PostgreSQL PG:"dbname='natural_earth' host='localhost' port='5432' user='natural_earth' password='natural_earth'" 10m_cultural/ne_10m_admin_0_antarctic_claim_limit_lines.shp

ogr2ogr is a file converter that does so much more. In this case we are converting the ShapeFiles into tables in a PostGIS database. Essentially, you copy the first part of the command in front of each file you want to load, changing only the final argument: “10m_cultural/ne_10m_admin_0_antarctic_claim_limit_lines.shp”.

Our settings:

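-nlt PROMOTE_TO_MULTI | Loads all our files as multi-part geometries, so polygons become multipolygons and lines become multilinestrings. A ShapeFile can mix single-part and multi-part features in one layer, whereas a PostGIS geometry column is created for a single geometry type, so without this setting the multi-part features would fail to load.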

-progress | Shows a progress bar.

-skipfailures | Continues after a failure, skipping the feature that failed, rather than stopping.

-overwrite | Overwrites a table if there is one with the same name. Our tables will be named after the ShapeFile, since we are not specifying a name.

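-lco PRECISION=no | Tells the driver not to carry over the field widths and precisions declared in the ShapeFile, so columns are created as plain FLOAT8, INTEGER and VARCHAR types rather than NUMERIC(width, precision) and CHAR(width). This helps keep numbers manageable, especially with this data where precision is not important.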

-f PostgreSQL PG:"dbname='DatabaseName' host='IpAddressOfHost' port='5432' user='Username' password='Password'" | Details of the database we are connecting to.
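The spreadsheet step could also be done in the shell. The sketch below is not what I used; it assumes the ShapeFile paths sit in a plain text file, one per line, and the names PG_CONN and make_commands are made up for illustration. The options and connection details are the ones from the command above.

```shell
# Connection string as in the command above; adjust to your own database.
PG_CONN="PG:dbname='natural_earth' host='localhost' port='5432' user='natural_earth' password='natural_earth'"

# Print one ogr2ogr command for each ShapeFile path read from standard input.
# Assumption: paths contain no double quotes or dollar signs, which holds
# for the plain Natural Earth file names.
make_commands() {
    while IFS= read -r shp; do
        # Skip blank lines in the list.
        [ -n "$shp" ] || continue
        printf 'ogr2ogr -nlt PROMOTE_TO_MULTI -progress -skipfailures -overwrite -lco PRECISION=no -f PostgreSQL "%s" "%s"\n' "$PG_CONN" "$shp"
    done
}
```

With the paths in a file called, say, shapefiles.txt, make_commands < shapefiles.txt > your_commands writes one load command per ShapeFile, which can then be checked by eye before running anything.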

Now we are ready to run the commands. While ogr2ogr commands can be pasted straight into the terminal, for this task that is not really feasible. So we can create a simple shell script.

Copy the commands into a file and then:

sh your_commands

There was one final error, with ne_10m_populated_places.shp, which was due to encoding. The client encoding used for the database connection can be changed from UTF8 to LATIN1 using:

export PGCLIENTENCODING=LATIN1;

After which the file loaded swimmingly.
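Since export changes the encoding for everything that follows in the same session, it may be tidier to apply LATIN1 to the one problem file only. A minimal sketch, with with_latin1 being a helper name made up for illustration:

```shell
# Run a single command with the PostgreSQL client encoding set to LATIN1.
# The assignment prefix only affects the environment of that one command
# (when it is an external program such as ogr2ogr), so later loads in the
# same session keep whatever encoding was in place before.
with_latin1() {
    PGCLIENTENCODING=LATIN1 "$@"
}
```

The load would then be the usual ogr2ogr command with with_latin1 in front of it and the path to ne_10m_populated_places.shp as the final argument.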

Now for some mapping.

Thanks to:

http://lists.osgeo.org/pipermail/gdal-dev/2009-May/020771.html