The Ordnance Survey have begun to release their data as free to use. I think this is great and, despite what some say, I think the selection is good as well. I can see why MasterMap and AddressBase, their flagship products, still cost money: there are significant costs associated with producing them.

There is, however, still a bit of a barrier to entry when it comes to using OS OpenData. This guide will hopefully make downloading the data a little bit easier.

OS OpenData needs to be ordered from:

https://www.ordnancesurvey.co.uk/opendatadownload/products.html

There is no cost, but a valid e-mail address is required.

For my purposes I started with OS Street View® and OS VectorMap™ District for the whole country.

You will receive an e-mail with the download links for the data. Unfortunately these are individual links, since the data is split up into tiles: 55 in total for the whole of the UK. So we want some way to automate downloading them.

In comes curl, made by some nice Swedes. curl is a command-line tool that downloads whatever URL you give it.

Attempt number 1:

curl http://download.ordnancesurvey.co.uk/open/OSSTVW/201305/ITCC/osstvw_sy.zip?sr=b&st=2013-10-03T18:51:25Z&se=2013-10-06T18:51:25Z&si=opendata_policy&sig=YOUR_UNIQUE_CODE_HERE

Result:

[1] 20182
[2] 20183
[3] 20184
[4] 20185
[2] Done st=2013-10-03T18:51:25Z
vesanto@hearts-lnx /data/OS $
ResourceNotFound
The specified resource does not exist.
RequestId:54953719-5e43-4791-aa60-51dee61f27ac
Time:2013-10-03T19:20:04.3403546Z

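Not quite there. Without quotes, the shell treats each & in the URL as "run this in the background", so curl only receives the URL up to the first & and the rest is run as separate commands (hence the job numbers above). Quoting the URL fixes this: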

curl 'http://download.ordnancesurvey.co.uk/open/OSSTVW/201305/ITCC/osstvw_sy.zip?sr=b&st=2013-10-03T18:51:25Z&se=2013-10-06T18:51:25Z&si=opendata_policy&sig=YOUR_UNIQUE_CODE_HERE'

That worked, but curl wrote the contents of the zip file straight to the terminal (Ctrl+C stopped it).

So we need to specify an output file as well, using -o:

curl 'http://download.ordnancesurvey.co.uk/open/OSSTVW/201305/ITCC/osstvw_sy.zip?sr=b&st=2013-10-03T18:51:25Z&se=2013-10-06T18:51:25Z&si=opendata_policy&sig=YOUR_UNIQUE_CODE_HERE' -o osstvw_sy.zip

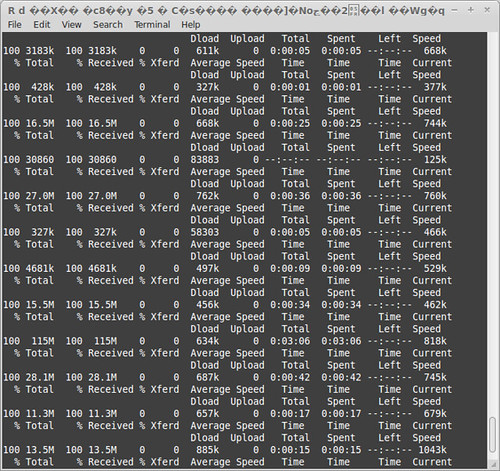

Success.

To run this in bulk, build one curl command per download link in a spreadsheet (LibreOffice Calc or Excel) and turn the result into a simple shell script.

Copy the commands into a file and then:

sh the_file_containing_your_commands
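If you would rather skip the spreadsheet step, the same thing can be sketched as a small shell script. This is only a rough outline, not the method I used above: it assumes you have pasted the download links from the e-mail into a text file, one link per line, and the function names `filename_from_url` and `download_all` are made up for this example. The output file name is taken from the link itself by dropping the query string and the path.

```shell
#!/bin/sh
# Sketch: download every link listed in a text file (one link per line).
# The function names and the link file layout are assumptions for this example.

# Derive the local file name from a link: strip the query string, then the path.
filename_from_url() {
    url_without_query=${1%%\?*}
    printf '%s\n' "${url_without_query##*/}"
}

# $1: path to the text file containing the download links
download_all() {
    while IFS= read -r url; do
        [ -n "$url" ] || continue            # skip blank lines
        out=$(filename_from_url "$url")
        echo "Downloading $out"
        # The URL is quoted, so the & characters are passed to curl untouched
        curl --fail --silent --show-error -o "$out" "$url" \
            || echo "Failed: $url" >&2
    done < "$1"
}

# Only start downloading when a link file is given on the command line
if [ -n "${1:-}" ]; then
    download_all "$1"
fi
```

Saved as, say, download_os.sh, it would be run as `sh download_os.sh links.txt` (both file names are placeholders). Remember that the links expire after a few days, as the st and se values in the URL suggest.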

To load the downloaded data into PostGIS, see my previous post on loading data into PostGIS.

Also a few tips for newer users of OS OpenData:

All the OS OpenData products use the British National Grid projection (EPSG:27700). Watch out for this in QGIS: version 1.8 used the 3-parameter datum transformation, while 2.0 uses the 7-parameter one. This means that if you created data in QGIS 1.8 and are now using 2.0, your data may not line up if you have “on the fly” CRS transformation turned on. To fix this, go into the layer properties and re-define the projection as British National Grid (EPSG:27700) for all the layers.

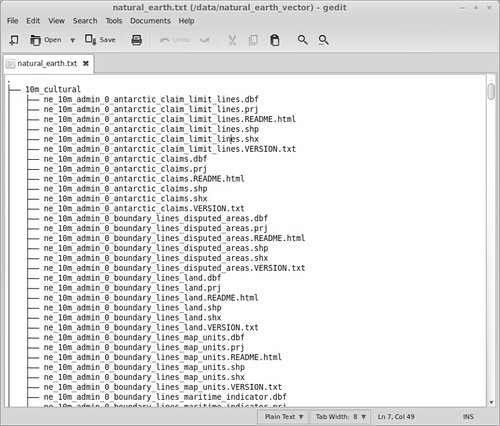

If you are working with raster data, you need to copy the .tfw files into the same directory as the .tif files. A .tfw (TIFF world file) tells the matching .tif image where it belongs in the world.
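Copying the world files by hand gets tedious with a lot of tiles, so here is a rough sketch of how it could be scripted. It assumes each .tfw has the same base name as its .tif and that the extensions are lower case, which may not match your download exactly. The function name `copy_world_files` and the directory arguments are made up for this example.

```shell
#!/bin/sh
# Sketch: copy each .tfw world file next to the .tif with the same base name.
# Assumes matching base names and lower-case extensions.

# $1: directory holding the .tfw files, $2: directory holding the .tif files
copy_world_files() {
    tfw_dir=$1
    tif_dir=$2
    for tif in "$tif_dir"/*.tif; do
        [ -e "$tif" ] || continue            # nothing matched the pattern
        base=$(basename "$tif" .tif)
        if [ -e "$tfw_dir/$base.tfw" ]; then
            cp "$tfw_dir/$base.tfw" "$tif_dir/"
        else
            echo "No world file found for $base.tif" >&2
        fi
    done
}

# Only run when both directories are given on the command line
if [ "$#" -eq 2 ]; then
    copy_world_files "$1" "$2"
fi
```

Any .tif that has no matching world file is reported on standard error rather than silently skipped, so you can see which tiles will not be georeferenced.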